Crontab Deleted by Mistake: How to Recover from ‘crontab -r’

Years ago, I learned the power of command-line mistakes the truly hard way when I ran the mother of all destructive commands: rm -rf. I can't remember whether it was actually rm -rf / or whether I nuked a system directory instead, but either way it was bad. Let's just say it was a lesson you never forget! That particular self-inflicted blow was painful at the time, but the silver lining is that I've been extra cautious ever since. Well, at least when it comes to the rm command.

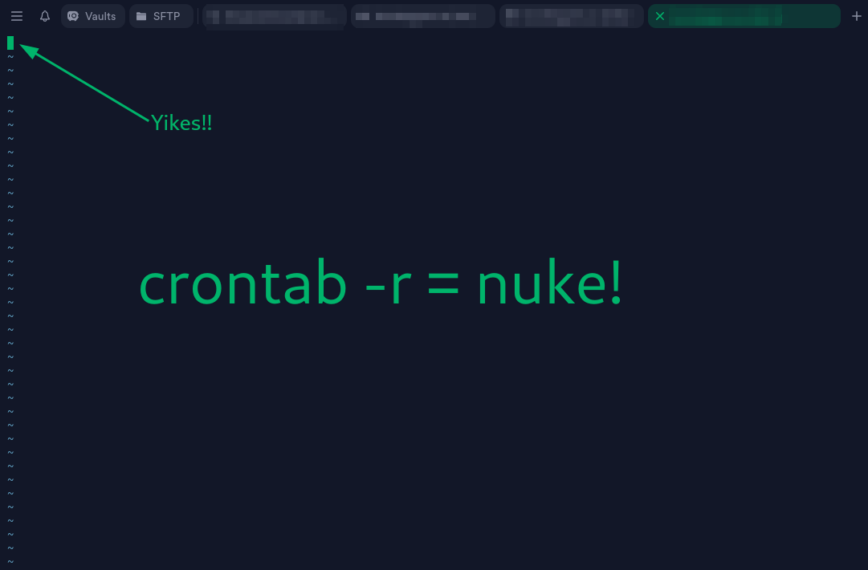

crontab -r Mishap

Today, I managed to relive a bite-sized version of that disaster when I intended to edit a crontab with crontab -e on a database server but, in a moment of pure mental autopilot, typed crontab -r and hit Enter.

Oops! No server meltdown this time, but I did blow away all the scheduled cron jobs contained in the server’s crontab file, in one fatal keystroke. Here’s how it happened and how I got things back in working order.

It was supposed to be a routine maintenance session for a client’s server. I hopped on, ready to add a single line in the crontab. My fingers, however, had a mind of their own. Instead of typing -e, they produced -r. In the blink of an eyr, eye (jk!) the crontab disappeared into the void, taking all scheduled tasks with it. Imagine that feeling. It took me about 60 full seconds to realize there was no quick recovery from this.

How I Recovered my Crontab Jobs

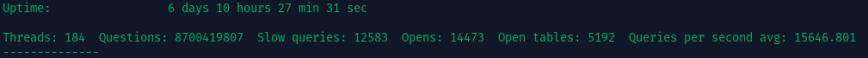

So, fortunately, there are system backups, but the full-system backups are nightly snapshots. Only the web files and databases have incremental backups that can be accessed without reverting the full system to the previous day or launching a new instance from the snapshot. The server in question isn't massive, but it sees a fair amount of database activity, so reverting to a system backup would be a last resort.

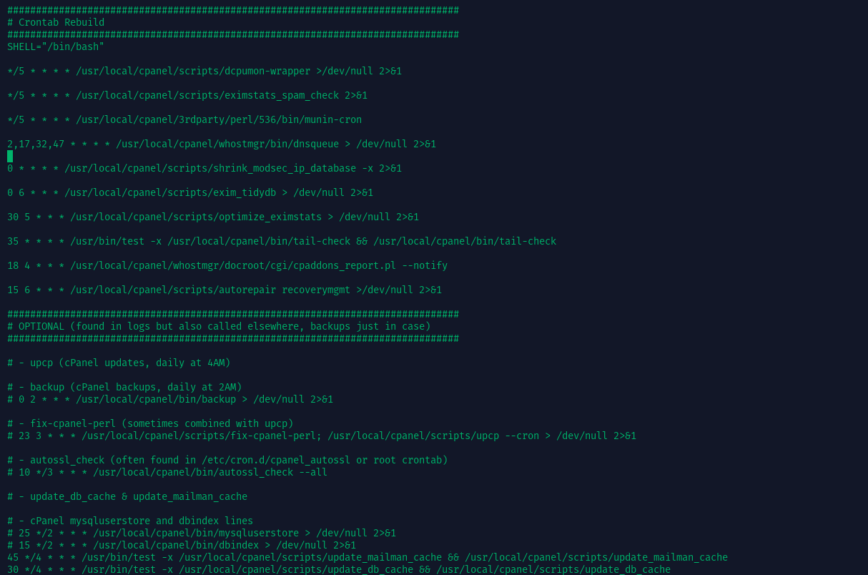

The recovery process:

- Check System Backups

As in my case, the first thing to do is see whether you have backups of /var/spool/cron/ or other relevant system files. If your backup strategy includes these directories and you can restore them easily, you should be all set. If not, read on.

- Sift Through Logs

I ran cat /var/log/cron. On some systems, check /var/log/syslog instead. Every cron job that runs leaves a trace, so I pieced together the commands and their run times from the log entries. This is a lifesaver when you have no direct backups, and it's far better than guesswork.

- Compare With Similar Servers

I also cross-referenced the crontab contents of a couple of other servers with similar setups. Similar systems often have consistent scheduling patterns, and this cross-check helped confirm that I was reconstructing each job accurately.

- Recreate the Crontab

With the puzzle pieces in hand, I ran crontab -e (carefully!) and rebuilt the entire job list, line by line. After saving, I ran crontab -l to confirm everything was correct, then restarted cron to ensure it picked up the new schedule.
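Sifting through the logs can be partly automated. The sketch below is a pair of hypothetical shell functions (the names cron_log_commands and cron_log_times are made up for illustration). It assumes the classic syslog format that cron uses on many systems, where each run is logged as a line like `Mar  9 12:00:01 db1 CROND[101]: (root) CMD (/usr/local/bin/backup.sh)`. The first function counts how often each distinct command ran; the second lists the HH:MM times at which one command ran, so you can infer its schedule. On systems that log only to the journal, or that use ISO-8601 timestamps, the field positions will differ.

```shell
# Hypothetical helpers for rebuilding a lost crontab from cron's log.
# Assumption: classic syslog lines such as
#   Mar  9 12:00:01 host CROND[101]: (root) CMD (/path/to/job.sh)
# as written to /var/log/cron (RHEL-style) or /var/log/syslog (Debian-style).

# Count how many times each distinct command was run by cron.
cron_log_commands() {
    log_file="${1:-/var/log/cron}"
    # Keep only cron's CMD lines, strip everything up to "CMD (",
    # drop the closing parenthesis, then count each distinct command.
    grep 'CMD (' "$log_file" \
        | sed -n 's/.*CMD (\(.*\))[[:space:]]*$/\1/p' \
        | sort | uniq -c | sort -rn
}

# List the HH:MM times at which commands matching a fixed string ran.
# A command that always starts at the same minute hints at its schedule.
cron_log_times() {
    log_file="$1"
    pattern="$2"
    # Field 3 of a classic syslog line is the HH:MM:SS timestamp.
    grep 'CMD (' "$log_file" | grep -F -- "$pattern" \
        | awk '{ print substr($3, 1, 5) }' | sort | uniq -c
}
```

For example, running cron_log_times /var/log/cron backup.sh and seeing the command at 02:30 every day would point to a 30 2 * * * entry. Keep in mind that logs only go back as far as your log retention, so weekly or monthly jobs may only show up in rotated (possibly compressed) log files.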

Crontab restored successfully

How to Avoid This Mistake?

Once you run crontab -r, that user's crontab file is physically removed from the system's spool directory (e.g., /var/spool/cron/ on Red Hat-style systems or /var/spool/cron/crontabs/ on Debian and Ubuntu). The cron daemon notices almost immediately; there's no permanent "in-memory" queue that would keep your old jobs running until a service restart. So, by the time you've typed crontab -r, all scheduled tasks for that user are effectively gone.

Cron re-checks the crontab files periodically, and if a file is gone, the daemon drops the corresponding jobs. There isn't a separate place that still holds the old schedule, in memory or otherwise. Once the spool file is deleted, cron can't see those jobs anymore, even if you haven't restarted the crond service. Sorry!

If you need to reconstruct it, logs or server backups are your only options, at least as far as I'm aware.

1. Back Up the Crontab

Always make a quick backup before editing or removing your crontab.

crontab -l > crontab-backup.txt

If you do slip up, you can recover with:

crontab crontab-backup.txt

2. Back Up Using an Alias

You can set up a simple alias in your ~/.bashrc that creates a directory ~/crontab_backups if it doesn't already exist, backs up your existing crontab with a timestamp in the filename (e.g., crontab-20250309_122314.bak), and then launches the usual crontab editor (crontab -e).
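Here is a minimal sketch of that setup, written as a shell function rather than a one-line alias so the steps are easier to follow. The name crontab_edit is hypothetical, and the sketch assumes bash or zsh plus a crontab command that supports -l and -e. Paste it into ~/.bashrc and run crontab_edit instead of crontab -e.

```shell
# Hypothetical helper: back up the current crontab, then open the editor.
# Backups go to ~/crontab_backups with a timestamp in the filename,
# e.g. crontab-20250309_122314.bak.
crontab_edit() {
    backup_dir="$HOME/crontab_backups"
    mkdir -p "$backup_dir" || return 1
    backup_file="$backup_dir/crontab-$(date +%Y%m%d_%H%M%S).bak"
    # Save the existing crontab. If there is none yet, crontab -l fails,
    # so remove the empty file instead of keeping a useless backup.
    if crontab -l > "$backup_file" 2>/dev/null; then
        echo "Backed up crontab to $backup_file"
    else
        rm -f "$backup_file"
    fi
    # Open the normal editor session.
    crontab -e
}
```

One design choice worth noting: if you have no crontab yet, the function deletes the empty backup file rather than leaving a misleading zero-byte .bak behind. To restore a backup, pass it to crontab just like in the previous tip.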

3. Alias or Shell Safeguards

Create an alias or wrapper script so that typing crontab -r either prompts you or is disabled entirely. Just be sure you know how to override it when you really do mean to remove the crontab. For example, add this line to your ~/.bashrc or ~/.zshrc:

alias crontab-r='echo -n "Really delete your crontab? (y/n)? "; read ans; if [ "$ans" = "y" ]; then crontab -r; else echo "Canceled."; fi'

Now, whenever you type:

crontab-r

it will prompt you:

Really delete your crontab? (y/n)?

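If you type y, it proceeds with deletion; otherwise, it cancels. Keep in mind that this alias is named crontab-r (one word), so it only fires when you type exactly that. Aliases match only the command name, so it won't intercept crontab -r typed with a space; catching that takes a wrapper function or script.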
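A shell function named crontab, unlike an alias, receives the command's arguments, so it can intercept crontab -r typed with a space. Here is a minimal sketch, assuming bash or zsh. It only checks for a standalone -r argument, so combined flags such as -ir pass straight through. The builtin command skips the function and runs the real crontab binary, which is also how you override the wrapper when you really do mean to delete: command crontab -r. Many cron implementations (for example cronie and Debian's cron) also support crontab -i, which makes -r ask for confirmation on its own.

```shell
# Hypothetical wrapper: a function named "crontab" shadows the real command
# in interactive shells, so it sees every argument (an alias cannot).
crontab() {
    for arg in "$@"; do
        if [ "$arg" = "-r" ]; then
            printf 'Really delete your crontab? (y/n) '
            read -r ans
            if [ "$ans" != "y" ]; then
                echo "Canceled."
                return 1
            fi
            break
        fi
    done
    # "command" bypasses this function and runs the real crontab binary.
    command crontab "$@"
}
```

If you use this function, you no longer need the separate crontab-r alias; with both in place, the alias would call the wrapper and you would be asked twice.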

Note: After you update your shell startup file, run source ~/.bashrc (or the equivalent for your shell) or log out and back in so the alias changes take effect.

4. Muscle Memory Caution!

The best defense is to slow down when typing commands in a privileged shell. Double-check your flags, especially if you're in the habit of quick, repetitive keystrokes.

Conclusion

While this wasn't as catastrophic as my old "rm -rf /" mistake, any accidental file removal can be nerve-racking. The important takeaway is that mistakes happen, even to those of us who've learned a few lessons the hard way. With a solid backup plan, detailed logs, and a bit of muscle memory caution, these slip-ups can be turned into manageable detours rather than disasters.

And yes, I’m definitely triple-checking my commands from now on. Lessons learned, again!

So that took an hour out of my morning! Has anyone else had this happen to them before? What’s the worst oops moment that you’ve had? Any suggestions or tips?

Uh, that's bad.

I think Timeshift is a good way to protect yourself against this.

My worst mistake?

In the early 2000s I bought a DVD burner and wanted to back up my hard disk. When I was installing the DVD drive, I dropped the hard disk.

What can I say, a backup was no longer necessary…

Hours of programming work were simply gone, forever.

Thanks @hydn for the explanation.

I don't remember which was my worst oops moment, but I'm a pretty hasty guy, and sometimes I don't pay enough attention while doing critical operations.

I would say one of the most efficient ways to manage a disaster is to keep multiple incremental backups of your hard drive, or backup images of your most critical/important files.

For what it's worth, I tried backing up a Linux hard drive with Timeshift. It went well, but when I restored the image, the system was not very responsive and was missing some modules.

So I think it's essential to have a complete image of the hard drive, with all your installed software and your important data.

On Linux, think for example of a broken update that completely breaks your system, perhaps with a broken greeter or LightDM, so you can't get into the desktop anymore.

In this case, possible solutions are:

I'm talking about a full system disaster where you can't even log in to the desktop environment.

For just a few critical files, for example crontab or fstab, I think the best practice is to keep a backup copy of each important file; if a file breaks, you can recover it from the backup. But yes, with this practice you need to be consistent with backups: at least once a week, or better yet, on a schedule.

I have multiple distributions installed on most of my systems and I also have multiple systems, multiple USB Flash Drives and a variety of methods and locations of backups so no matter whether I make a mistake or I have a flood or something like that I can recover.

I wrecked a multi-distribution system once when I mistakenly installed over the entire setup. Because I had fairly recent flash drive copies, it wasn't more than ninety minutes before I had all systems installed. It was only a little bit longer and I had everything 100% back to normal, perhaps two hours from mistake to 100% normal and updated.

I remember in my young days, I was at my dad's office computer, running either DOS 6.1 or Windows 98. I was at the command prompt experimenting with some files or something. I'm talking 30 years ago, so I don't remember all the details. What I do remember is that I was in C:\ or C:\Windows, I typed del *.*, pressed Enter, and then I froze. What the bleep!!!

Fortunately I didn't do anything else, and the techs came, took the computer, and ran an undelete with a recovery tool. Lesson well learned! I don't think I ever used that command again.