Building an LLM-Optimized Linux Server on a Budget

As machine learning advances, more individuals and small organizations are exploring how to run large language models (LLMs) such as DeepSeek, LLaMA and Qwen on their home servers. This article recommends an LLM-optimized Linux server build for under $2,000, a setup that rivals or beats pre-built solutions like Apple’s Mac Studio on cost and raw performance for LLM workloads.

Previously, we covered the step-by-step installation of DeepSeek and how to host it locally and privately. You can also use solutions like Jan (Cortex), LM Studio, llamafile and gpt4all. Regardless of what you are running, this article will help you build a Linux server that can run small to medium-sized LLMs.

LLM-Optimized Linux Server

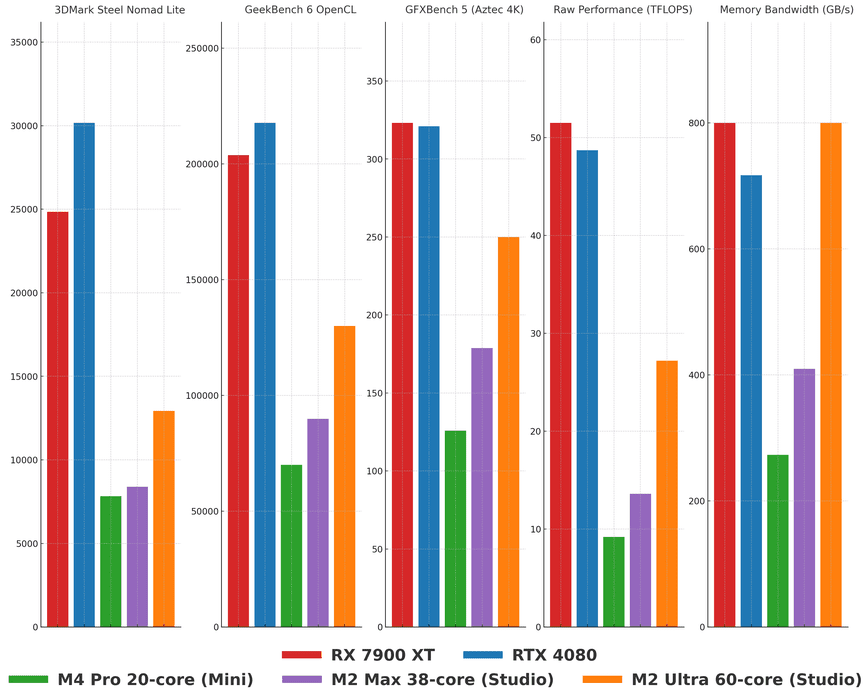

Benchmark figures were compiled mainly from nanoreview, with some from other sources.

This chart compares these GPUs across five benchmarks: 3DMark Steel Nomad Lite, GeekBench 6 OpenCL, GFXBench 5 (Aztec 4K), Raw Performance (TFLOPS), and Memory Bandwidth (GB/s).

- AMD RX 7900 XT (red): Killer performance across the board, best value.

- NVIDIA RTX 4080 (blue): Close to RX 7900 XT but pricier.

- M4 Pro 20-core (Mac Mini) (green): Low performance, low memory bandwidth.

- M2 Max 38-core (Mac Studio) (purple): Ok performance, but trails behind dedicated GPUs.

- M2 Ultra 60-core (Mac Studio) (orange): Great memory bandwidth, weaker raw performance and pricey.

This is where a custom-built Linux server comes in: 128 GB of DDR4 or DDR5 system RAM and a powerful GPU with 20 GB of VRAM, at a lower price. Sure, for larger models you will be forced to fall back on the 128 GB of system RAM or purchase one or more $5,000 GPUs. Instead, let’s take a look at two build options, one around $2,000 and one around $3,000, including a very capable sub-$1,000 AMD GPU!

The goal is to strike a balance between performance and cost while making sure the hardware can handle the heavy lifting of small to medium LLMs like DeepSeek 14B, 32B and 70B. Now on to the good stuff, but first, please note the following:

1) For both builds below, ensure the motherboard BIOS is updated to the latest version to support the chosen CPU and RAM configurations.

2) High-capacity RAM configurations (128 GB) may require manual tuning for optimal stability, on both DDR4 and DDR5.

3) The product links below are Amazon affiliate links, meaning we may earn a small commission if you purchase through them at no extra cost to you.

4) Manufacturer links were not included, as they tend to change frequently and often lead to broken URLs. This approach ensures you always have access to the latest pricing and availability.

5) Compare prices with bhphotovideo.com, newegg.com and eBay (be careful).
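To make the 14B, 32B and 70B sizing concrete, here is a rough back-of-the-envelope sketch in Python. It is an approximation under stated assumptions, not a measurement: it counts only the model weights (parameters × bits per weight), ignores the KV cache and runtime overhead, and treats quantization as exactly 4, 8 or 16 bits per weight, which real quantized files only approximate. The 20 GB and 128 GB figures come from the $2000 build; the function names are made up for this example.

```python
# Rough sizing sketch: weights only, no KV cache or runtime overhead (assumption).
VRAM_GB = 20    # GPU memory in the $2000 build
RAM_GB = 128    # system memory in both builds


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def placement(size_gb: float) -> str:
    """Say where a model of this size could live on the build."""
    if size_gb <= VRAM_GB:
        return "fits in VRAM"
    if size_gb <= VRAM_GB + RAM_GB:
        return "VRAM + RAM offload"
    return "too large for this build"


if __name__ == "__main__":
    for params in (14, 32, 70):
        for bits in (4, 8, 16):
            size = weights_gb(params, bits)
            print(f"{params}B @ {bits}-bit: ~{size:.0f} GB -> {placement(size)}")
```

Under these assumptions, a 4-bit 14B or 32B model fits on the 20 GB card, while a 70B model spills into system RAM even at 4 bits. In practice, leave headroom for context length and for the operating system.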

$2000 Build: 20 GB GPU, DDR4, and PCIe 4.0

PowerColor Hellhound Radeon RX 7900 XT GPU (20 GB) + Corsair 4000D Airflow Case.

Here’s the hardware config for the $2000 budget build:

- AMD Ryzen 9 5900X (12-Core Processor): Supports PCIe 4.0. ~ $300

- Cooler Master Hyper 212 Black Edition CPU Cooler: Budget friendly air cooling. ~ $30

- MSI MAG B550 Tomahawk Motherboard: PCIe 4.0 slots. ~ $120

- Corsair Vengeance LPX 128 GB DDR4-3600 RAM: Enough capacity for medium to large models when offloading to RAM. ~ $240

- TEAMGROUP MP44L 1 TB PCIe 4.0 NVMe SSD: Reliable storage with average NVMe speeds. ~ $75

- PowerColor Hellhound Radeon RX 7900 XT GPU (20 GB): 20 GB of high-bandwidth VRAM for model inference. ~ $900

- Corsair 4000D Airflow Case: Optimized for airflow. I own this case, and it’s a solid value as well. ~ $100

- MSI MAG A1000GL Gaming Power Supply: Reliable power. ~ $200

$3000 Build: 24 GB GPU, DDR5, and PCIe 5.0

You may opt to spend roughly $1,000 more on this more powerful build:

- AMD Ryzen 9 7900X (12-Core Processor): Better single-threaded and multithreaded performance. Supports PCIe 5.0. ~ $500

- Cooler Master Hyper 212 Black Edition CPU Cooler: Budget friendly air cooling. ~ $30

- MSI PRO B650-S WIFI Motherboard: PCIe 5.0 slot for GPU. ~ $250

- Corsair Vengeance 128GB DDR5-5600 RAM: DDR5 has higher memory bandwidth than DDR4. ~ $420

- TEAMGROUP T-Force Cardea Z540 2TB PCIe 5.0 NVMe SSD: Faster, only if needed. ~ $150

- ASUS Dual Radeon RX 7900 XTX OC (24 GB): ~ $1400

- Corsair 4000D Airflow Case ~ $100

- MSI MAG 1250GL PCIE 5: Reliable power. ~ $220
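As a quick sanity check on the two parts lists above, this short Python sketch totals the approximate prices quoted in this article. The prices are the rounded "~" figures from the lists, so real totals will drift with the market; the dictionary names are just labels chosen for this example.

```python
# Approximate part prices in USD, copied from the two lists above.
build_ddr4 = {
    "AMD Ryzen 9 5900X": 300,
    "Cooler Master Hyper 212 Black Edition": 30,
    "MSI MAG B550 Tomahawk": 120,
    "Corsair Vengeance LPX 128 GB DDR4-3600": 240,
    "TEAMGROUP MP44L 1 TB": 75,
    "PowerColor Hellhound RX 7900 XT": 900,
    "Corsair 4000D Airflow": 100,
    "MSI MAG A1000GL": 200,
}

build_ddr5 = {
    "AMD Ryzen 9 7900X": 500,
    "Cooler Master Hyper 212 Black Edition": 30,
    "MSI PRO B650-S WIFI": 250,
    "Corsair Vengeance 128GB DDR5-5600": 420,
    "TEAMGROUP T-Force Cardea Z540 2TB": 150,
    "ASUS Dual Radeon RX 7900 XTX OC": 1400,
    "Corsair 4000D Airflow": 100,
    "MSI MAG 1250GL PCIE 5": 220,
}

total_ddr4 = sum(build_ddr4.values())
total_ddr5 = sum(build_ddr5.values())

if __name__ == "__main__":
    print(f"DDR4 build: ${total_ddr4}")
    print(f"DDR5 build: ${total_ddr5}")
    print(f"Difference: ${total_ddr5 - total_ddr4}")
```

With the prices as listed, the first build comes to $1,965 and the second to $3,070. In both cases the GPU is a little under half of the total.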

What about the Mac Mini or Mac Studio?

Apple’s hardware like the Mac Mini, Mac Studio and MacBooks use a unified memory architecture where the CPU and GPU share the same memory pool. This means the GPU can access the system RAM as needed. A Mac Mini with 64 GB of unified memory can allocate a significant portion for GPU tasks.

However, most consumer grade discrete GPUs with dedicated VRAM max out at ~ 24 GB. The NVIDIA RTX 4090 has 24 GB of VRAM but starts at $3,000!

To balance performance and cost, our build pairs a 20 GB GPU (about $1,000) with 128 GB of DDR4 RAM for around $2,000 fully built (minus additional case fans and a few accessories). By comparison, 128 GB of unified memory in a Mac Studio would cost $5,000 to $10,000.

Notice also that the PCIe 5.0 build above features 128 GB of DDR5 RAM, providing even more memory bandwidth and performance for demanding workloads, still at about half the cost of a 128 GB Mac Studio.
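To put the DDR4 versus DDR5 bandwidth difference into numbers, here is a small Python sketch. It assumes the usual consumer desktop layout of two memory channels, each 64 bits (8 bytes) wide, and computes the theoretical peak only; four high-capacity DIMMs often will not run at their rated speed. The tokens-per-second figure uses a common rule of thumb (each generated token reads all the weights once), so treat it as a loose upper bound for a model held entirely in system RAM, not a benchmark. The 35 GB model size is an assumption standing in for a 70B model at roughly 4 bits per weight.

```python
def peak_bandwidth_gbs(mega_transfers: int, channels: int = 2, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (assumes 64-bit channels)."""
    return mega_transfers * 1e6 * bytes_per_transfer * channels / 1e9


def max_tokens_per_sec(bandwidth_gbs: float, model_gb: float) -> float:
    """Rule-of-thumb ceiling: every token streams the full weights once."""
    return bandwidth_gbs / model_gb


if __name__ == "__main__":
    model_gb = 35  # assumption: ~70B parameters at ~4 bits per weight
    for name, speed in (("DDR4-3600", 3600), ("DDR5-5600", 5600)):
        bw = peak_bandwidth_gbs(speed)
        ceiling = max_tokens_per_sec(bw, model_gb)
        print(f"{name}: {bw:.1f} GB/s peak, at most ~{ceiling:.1f} tokens/sec")
```

Layers that fit in VRAM run at the GPU's much higher memory bandwidth, which is why the GPU remains the most important part of either build.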

The Mac Mini is a more affordable option at around $2,200 for the top 64 GB memory configuration. Also, take a look at this Mac Mini LLM comparison YouTube video.

Now, I should also mention that while the builds above are faster than even the Mac Studio M2 Ultra 60-core, the dedicated GPUs use significantly more electricity, as much as 2x or more! If electricity costs are high in your area, keep this in mind.
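To see what that difference could mean on a power bill, here is a simple Python estimate. Every input below is a placeholder assumption (average draw in watts, hours of use per day, price per kWh), not a measured value for any of the machines discussed; plug in your own numbers.

```python
def annual_cost_usd(avg_watts: float, hours_per_day: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for a machine drawing avg_watts while in use."""
    kwh_per_year = avg_watts / 1000 * hours_per_day * 365
    return kwh_per_year * usd_per_kwh


if __name__ == "__main__":
    # Placeholder assumptions: a 2x difference in average draw,
    # 4 hours of inference per day, $0.30 per kWh.
    hours, price = 4, 0.30
    for label, watts in (("Lower-power machine", 200), ("Dedicated-GPU build", 400)):
        cost = annual_cost_usd(watts, hours, price)
        print(f"{label}: ~${cost:.2f} per year")
```

Because the cost scales linearly with watts, hours and price, a 2x difference in draw is a 2x difference in cost, whatever your local rate is.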

Update May 2nd 2025:

Up-to-Date GPU Recommendations

In the last few months, the NVIDIA RTX 4060 has emerged as the go-to budget GPU for LLM inference, delivering 40+ tokens/sec on 7–8 B models with 70–90% utilization in Ollama benchmarks. If you can stretch your budget slightly, the newly released RTX 5060 Ti 16 GB offers about 24% more performance per dollar than a 4060 Ti, thanks to Blackwell-architecture gains. This makes it an attractive upgrade if you need extra throughput without jumping to the $700+ tier.
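If you want to check tokens/sec figures like these on your own hardware, one option is to compute them from the statistics Ollama returns with a completed generation. The sketch below assumes the response is the JSON object from Ollama's generate endpoint, which reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds). The sample values are invented purely to illustrate the arithmetic and are not benchmark results.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama response (eval_duration is in nanoseconds)."""
    return response["eval_count"] * 1e9 / response["eval_duration"]


if __name__ == "__main__":
    # Invented sample values for illustration only, not a real benchmark.
    sample_response = {
        "eval_count": 420,                # tokens generated
        "eval_duration": 10_000_000_000,  # 10 seconds, in nanoseconds
    }
    print(f"{tokens_per_second(sample_response):.1f} tokens/sec")
```

Run the same prompt a few times and average the results, since the first request also includes model load time in the total duration.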

Multi-GPU & CPU-Only Inference Options

Beyond single-card setups, open-source engines like llama.cpp now include GGUF memory layouts and AVX-512 acceleration, pushing CPU-only inference on small models into the 10–20 tokens/sec range on modern x86 rigs.

For larger clusters, Comet’s tutorial shows how to repurpose crypto-mining hardware into a Kubernetes-backed LLM farm by using Docker, NCCL, and Horovod to shard 70 B-parameter models across multiple budget GPUs while keeping per-node costs under $1,000.

Conclusion

Building a custom Linux machine for LLM workloads is a smart move if you want performance and flexibility without the expense of pre-built options. Compared to the Mac Studio, which can cost over $5,000 for 128 GB of unified memory, these Linux builds get you similar or more total memory plus a dedicated GPU for less than half the price.

With the $2,000 build you get a 20 GB GPU and 128 GB of DDR4 RAM, which is suitable for small to medium-sized models like DeepSeek 14B, 32B and even 70B when offloading to RAM. The $3,000 build upgrades to DDR5, PCIe 5.0 and a 24 GB GPU, offering even more memory bandwidth for heavy tasks, all for a fraction of the cost of a Mac.

You should also consider electricity costs, since powerful dedicated GPUs can consume a lot more power compared to Apple’s optimized chips. If power efficiency is a concern in your area, this should be factored in.

Overall, this is a powerful, scalable and budget friendly alternative to cloud services and pre-built machines. Whether you’re experimenting with LLMs locally, running inference or fine-tuning models, a well optimized Linux machine gives you control and performance at a relatively low cost.

Great article.

Typo at the bottom; M4 → M2.

I think it would be worth mentioning that the M4 Max is faster than the M2 Ultra with local LLM even though it has less memory bandwidth. Definitely quite excited for the M4 Ultra to come around; its local LLM performance will probably match or be close to a 4090 I’d imagine.

Framework’s new desktop launch has an AMD chip with unified memory up to 128GB (110GB GPU usable on Linux) for ~$2000. It only has 256 GB/s memory bandwidth though.

@Relearn4687 welcome to the community. I’ll make that correction. The first 5 comments are also visible from the blog post front page so your comments will be useful to future readers. Thanks!
