I was wrong! zswap IS better than zram

Rule of thumb / TL;DR:

If your system only swaps occasionally and a zram device sized at ~20–30% of your physical RAM comfortably covers your swap demand, ZRAM is the simpler and more effective option. But if swap use regularly pushes far beyond that or is unpredictable, or if your system has fast storage (NVMe), Zswap is the better choice. It dynamically compresses recently swapped-out pages into a pool in RAM, evicts the least recently used ones to disk swap, and delivers smoother performance under heavy pressure.

For years I’ve championed zram as the best swap‑related feature you could enable on a Linux desktop. When your system starts paging out memory, traditional swap writes whole pages to disk, which is painfully slow compared to RAM. Between 2017 and 2020, I wrote several posts explaining how to configure zram on servers, desktops, and even Raspberry Pi boards.

After removing ZRAM and just using a 32 GB swap file

Although zram was promoted out of the kernel’s staging area in 2014, most mainstream Linux distributions did not enable it by default until around 2020. You still had to load the zram module and set up the block device yourself, either by hand or with tools like zram-config or zram-tools. Those posts walked readers through exactly those steps.

By compressing memory pages and storing them in a block device in RAM, zram gave me the breathing room I needed on many systems that were low on free memory. On servers with slow-spinning hard disks (HDDs) and desktops with budget or older SSDs, enabling ZRAM felt like magic.

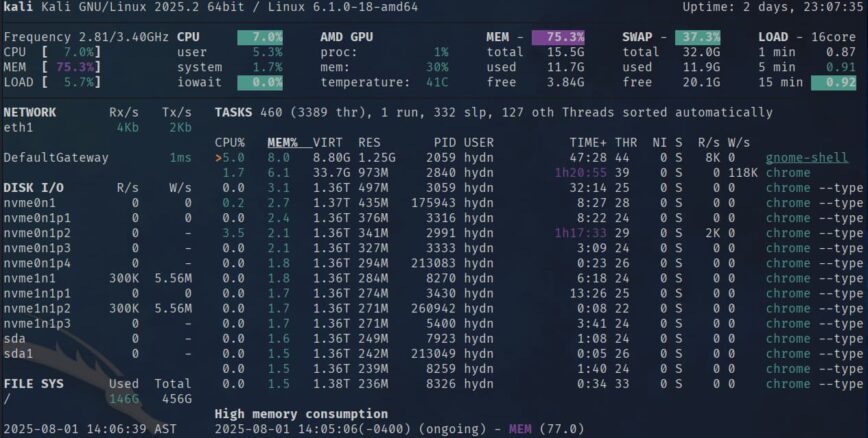

Fast‑forward a few years. My daily workstation still has only 16 GB of RAM, but my workloads have grown. Running virtual machines, dozens of browser tabs split across multiple workspaces, containers, editors, Remmina, Termius, Gimp, Spotify, etc., means I routinely chew through 20 GB of memory. Back in July 2023, I upgraded the storage on my desktop and laptop to very fast Hynix NVMe SSDs [Amazon affiliate link].

In that context, my old love affair with zram started to show cracks. After some experimentation and a lot of research, I’ve concluded that zswap is often a better choice than zram on modern desktops. This post revisits my earlier articles, explains why zswap may outperform zram, and shares the lessons learned from a few years of real‑world use.

Revisiting zram: why I loved it

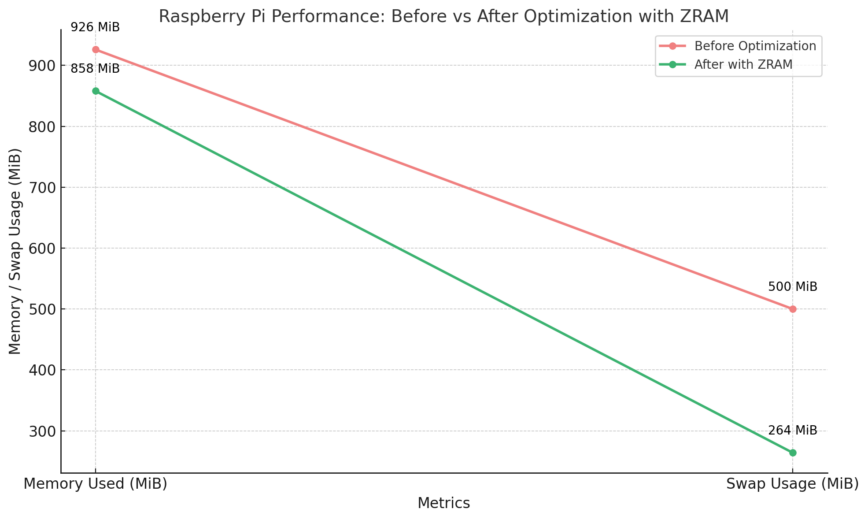

Screenshot from the article: Raspberry Pi Performance: Add ZRAM and These Kernel Parameters

To understand why I once pushed zram so hard, it helps to recall what it does and why it made sense then. The Linux kernel includes a zram module, but at the time most distributions did not enable or configure it automatically. When you load the module and initialize a device with zramctl, you get a compressed block device in RAM that you can format like a disk or use as swap.
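For readers who want to see what initializing a zram swap device by hand looks like, here is a minimal sketch. It assumes root privileges and util-linux's zramctl; the function name setup_zram_swap, the 4G default size, the zstd algorithm, and swap priority 100 are illustrative choices, not the exact settings from my original guides.

```shell
# Hypothetical helper: create and enable a zram swap device by hand.
# Requires root and util-linux (zramctl, mkswap, swapon).
# Defaults (4G, zstd, priority 100) are illustrative assumptions.
setup_zram_swap() {
  local size="${1:-4G}" algo="${2:-zstd}" dev
  modprobe zram || return 1
  # --find picks the first unused /dev/zramN, configures it, and prints its name.
  dev=$(zramctl --find --size "$size" --algorithm "$algo") || return 1
  mkswap "$dev" >/dev/null || return 1
  # A higher priority than any disk swap makes the kernel fill zram first.
  swapon --priority 100 "$dev" || return 1
  echo "$dev"
}
```

Run it from a root shell, for example setup_zram_swap 4G zstd, then confirm the result with zramctl and swapon --show.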

When you use that device as swap, pages being swapped out are compressed to roughly 30% to 50% of their original size and stored in memory (RAM) instead of being written to disk. Because the device resides in RAM, accessing it is much faster than reading from or writing to a physical drive. The Linux kernel documentation describes zram as creating “RAM‑based block devices… pages written to these disks are compressed and stored in memory itself”. This reduces disk wear and can significantly increase effective memory capacity.
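To check the compression ratio you are actually getting, you can compare the first two fields of /sys/block/zram0/mm_stat, which the kernel documents as orig_data_size and compr_data_size, both in bytes. A small sketch, assuming the device is zram0 (zram_ratio is a hypothetical helper name):

```shell
# Hypothetical helper: print the zram compression ratio from an mm_stat file.
# Field 1 = orig_data_size (bytes stored), field 2 = compr_data_size (bytes after compression).
# Field 3 (mem_used_total) also includes allocator overhead, so real RAM use is a bit higher.
zram_ratio() {
  local stat="${1:-/sys/block/zram0/mm_stat}"
  awk '{ if ($2 > 0) printf "%.2f\n", $1 / $2; else print "n/a" }' "$stat"
}
```

zramctl reports the same information in its DATA, COMPR, and TOTAL columns if you prefer a table.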

In my earlier posts I recommended allocating zram at roughly 25% of your physical RAM. On a 16 GB system, using a 4 GB zram block with a typical 2:1 compression ratio gave me about 8 GB of compressed swap capacity. Together with the remaining 12 GB of RAM, that setup effectively provided 20 GB of usable memory.
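One detail worth making explicit: zram's disksize caps the uncompressed data it accepts, while the RAM it actually consumes is roughly disksize divided by the compression ratio. So getting about 8 GB of swap capacity out of roughly 4 GB of RAM at 2:1 implies a disksize of about 8 GB. The sketch below only does that arithmetic; zram_effective_gb is a hypothetical helper, and the constant ratio is an assumption, since real ratios vary by workload.

```shell
# Hypothetical helper: back-of-the-envelope zram sizing.
# Args: total RAM (GB), RAM you let zram occupy (GB), compression ratio (default 2).
# Assumes a constant ratio and ignores allocator overhead.
zram_effective_gb() {
  local ram_gb="$1" zram_ram_gb="$2" ratio="${3:-2}"
  awk -v r="$ram_gb" -v z="$zram_ram_gb" -v k="$ratio" 'BEGIN {
    # disksize = uncompressed pages that fit into z GB of RAM at ratio k
    # effective = RAM left for everything else + uncompressed pages held by zram
    printf "disksize=%gG effective=%gG\n", z * k, (r - z) + z * k
  }'
}
```

zram_effective_gb 16 4 2 prints disksize=8G effective=20G, matching the numbers above. The 50% case discussed below, zram_effective_gb 16 8 2, prints disksize=16G effective=24G, but leaves only 8 GB of uncompressed RAM for the working set.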

When running my workloads on slow SSDs, the machine felt responsive despite heavy swapping. In those posts I stressed that zram is particularly attractive when you don’t have a disk‑backed swap device, because it can be set up entirely in memory and does not require any preexisting partition.

If you want a refresher on how to do that, see my 2017 guide, Running Out of RAM on Linux? Add Zram Before Upgrading!, which walks through installing the zram-tools package, sizing the device (typically 20–30% of RAM), and tuning vm.swappiness and vm.vfs_cache_pressure. Those earlier posts show why zram felt like magic on slow disks and why 25% was a sweet spot for many systems.
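If you want a swappiness value to survive a reboot, a sysctl.d drop-in is the usual place for it. This is a sketch: the file name 99-swap.conf and the helper write_swap_sysctl are hypothetical, 50 is the value suggested in my earlier guide, and the sketch leaves vm.vfs_cache_pressure at its kernel default of 100 rather than guessing at a value.

```shell
# Hypothetical helper: persist vm.swappiness in a sysctl.d drop-in (root needed for /etc).
# Default file name is an assumption; 50 matches the value from my earlier zram guide.
write_swap_sysctl() {
  local file="${1:-/etc/sysctl.d/99-swap.conf}" swappiness="${2:-50}"
  printf 'vm.swappiness = %s\n' "$swappiness" > "$file"
  # vm.vfs_cache_pressure defaults to 100; add a line here only if you tune it.
}

# After writing the file, apply it without rebooting (as root):
#   sysctl --system
# and read back the live values:
#   sysctl vm.swappiness vm.vfs_cache_pressure
```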

Where zram started to fall apart

Screenshot from the article: Running Out of RAM on Linux? Add Zram Before Upgrading!

The honeymoon with zram ended when my workloads grew beyond a few gigabytes of swap usage. I eventually found myself routinely using more than 10 GB of swap on top of 16 GB of physical memory. Clear case for buying more RAM, right? Well, I don’t plan to invest any more in this rig. I plan to build a new one next year and would rather put 32 GB of RAM in that. Until then, this workstation has to survive my workload.

Following my earlier formula, I bumped the zram device to 8 GB (50% of RAM), which, with a ~2:1 compression ratio, could offer up to 16 GB of compressed swap. Unlike a real disk partition, zram doesn’t reserve that memory up front. However, under my heavy workloads, the device eventually filled, meaning about 8 GB of physical RAM was occupied by compressed swap. That left only 8 GB for the working set and caches, leading to memory pressure sooner than before. When zram filled and the kernel had to spill to disk, performance nosedived.

Note: In my earlier zram guide:

- I suggested vm.swappiness=50 and explained how it balances swapping and caching on a desktop. When you switch to zswap, you may choose to leave swappiness in the 60–100 range (60 is the kernel default) or even raise it, since zswap’s compression makes swap I/O cheap. See my Part 2 article on zram for the rationale behind those settings at the time.
- I also recommended (and still recommend) limiting zram to roughly 20–30% of RAM. My 8 GB setup was a deliberate stress test: what happens if I allocate, and more importantly actually use, half my RAM for zram? As the kernel documentation warns, zram’s scalability is bounded by available memory and CPU. When you push it too far, you risk starving the rest of the system.

I also ran into an edge case: with zram occupying half of my RAM, suspending the system to RAM (sleep) and resuming from sleep occasionally caused complete lockups. While there isn’t an official bug report that I can point to, the kernel documentation warns that zram has limited scalability because the compressed device is limited by available RAM and incurs CPU overhead for compression/decompression.

In short, allocating too much of your memory to a fixed zram device can starve the system of the working memory it needs to handle suspend/resume, I/O, file caches, and other kernel tasks.

Comparing zram and zswap

The best way to summarize the trade‑offs is to lay out the facts side by side. Both technologies trade CPU time for reduced disk I/O. If your workload rarely touches swap or your swap needs are comfortably served by compressing a few gigabytes, zram is an elegant, easy solution. But when swap usage is large and unpredictable, or you have a fast NVMe drive, zswap’s write‑behind cache and dynamic pool offer a smoother experience.

ZRAM vs. Zswap Comparison

ZRAM and Zswap sizing quick reference (16 GB RAM system)

Notes

- ZRAM has a fixed uncompressed cap equal to its disksize. RAM is consumed on demand as pages are compressed into the device.

- ZSWAP grows on demand up to its pool cap; once full, it begins evicting to the backing swap device.
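To watch the zswap side of this in practice, the kernel exposes counters under debugfs (typically /sys/kernel/debug/zswap, readable by root): stored_pages is the number of pages held in the pool, pool_total_size is the pool's size in bytes, and written_back_pages counts pages evicted to the backing swap device. A sketch, where zswap_stats is a hypothetical helper and the page size defaults to whatever getconf PAGESIZE reports:

```shell
# Hypothetical helper: summarize zswap pool usage from debugfs counters.
# Args: counter directory (default /sys/kernel/debug/zswap), page size in bytes.
zswap_stats() {
  local dir="${1:-/sys/kernel/debug/zswap}" page="${2:-$(getconf PAGESIZE)}"
  local stored pool written
  stored=$(cat "$dir/stored_pages") || return 1
  pool=$(cat "$dir/pool_total_size") || return 1
  written=$(cat "$dir/written_back_pages") || return 1
  awk -v s="$stored" -v p="$pool" -v w="$written" -v ps="$page" 'BEGIN {
    # Uncompressed data held in the pool vs. the RAM the pool actually uses.
    printf "stored=%.1fMiB pool=%.1fMiB", s * ps / 1048576, p / 1048576
    if (p > 0) printf " ratio=%.2f", s * ps / p
    # Pages that were evicted from the pool to disk swap.
    printf " written_back=%.1fMiB\n", w * ps / 1048576
  }'
}
```

A steadily rising written_back figure is the "once full, it begins evicting" behavior described in the note above.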

Why I now prefer zswap

So why the title of this post? Simply put, my real‑world needs and hardware have changed. In recent years, every system I work with has fast storage: SSDs or better. Here is the rest of the reasoning behind my change of stance:

- Swap demand beyond what zram can comfortably handle: My workstation often uses 10–20 GB of swap. Allocating a fixed zram device big enough to absorb that would mean dedicating half of my RAM to compressed swap, leaving too little for everything else. Zswap lets me use only as much RAM as needed for the cache and gracefully spills to my NVMe swap file when the working set grows.

- NVMe swap is fast: As I mentioned, with an NVMe drive, the latency penalty of writing pages to disk is tiny compared to HDDs or older SATA SSDs. Zswap still reduces writes by caching compressible pages, but if a page is incompressible or the cache is full, writing it to NVMe is not the nightmare it once was.

- Better behavior during suspend/resume: Without a giant zram device holding gigabytes of compressed pages, the kernel has more free memory for device drivers and kernel work queues during suspend and resume. In my tests the system no longer hangs when waking from sleep.

- Simpler management: Enabling zswap is a matter of setting zswap.enabled=1 at boot. No need to create and format block devices or fiddle with priorities. You can adjust the pool size and compression algorithm through sysfs on the fly.

- Reduced risk of LRU inversion: Because zswap evicts the least recently used compressed pages to disk and keeps the most recent pages in RAM, it avoids the scenario where old data sits in fast memory while new pages go to slow storage. This makes swap behavior more predictable when the workload changes.
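Adjusting zswap on the fly, as mentioned under simpler management, looks roughly like this. zswap's module parameters live in /sys/module/zswap/parameters and can be written at runtime as root. The helper configure_zswap is hypothetical; its lzo and 25% defaults mirror the final setup described later in this post, the compressor you pick must be available in your kernel, and zswap still needs an active swap device to evict to. As far as I understand it, changing the compressor only affects pages stored afterwards.

```shell
# Hypothetical helper: enable and tune zswap at runtime through sysfs (root needed).
# Args: compressor, max pool size as % of RAM, parameter dir (overridable for testing).
configure_zswap() {
  local comp="${1:-lzo}" pct="${2:-25}" dir="${3:-/sys/module/zswap/parameters}"
  echo "$comp" > "$dir/compressor" || return 1        # e.g. lzo, lz4, zstd
  echo "$pct"  > "$dir/max_pool_percent" || return 1  # pool cap before eviction to disk
  echo 1       > "$dir/enabled" || return 1           # reads back as Y once enabled
}
```

Changes made this way last until reboot; for a persistent setup, put the equivalent zswap.* options on the kernel command line.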

Situations where Zram still shines

I’m not declaring zram obsolete. There are scenarios where zram remains the right tool:

- Small systems with no swap device: Many embedded boards, Raspberry Pi boards, and VMs don’t have swap partitions and sometimes run on SD cards or flash with limited write endurance. In those cases, zram provides fast, wear‑free swap right out of RAM. Several of my earlier articles targeted these devices, and the advice still stands.

- Systems with extremely slow storage: If your only storage is a 5400 rpm HDD or old/slow SATA SSD, the cost of writing uncompressed pages to disk is high. Zram can avoid those writes entirely until the compressed device is full. On a system with 2–4 GB of RAM that seldom swaps more than a gigabyte or two, zram will feel snappier than zswap.

- Transient workloads needing a temporary RAM disk: Because zram creates block devices, you can format them as ext4 or XFS and use them as /tmp or /var/cache to speed up builds and tests. The kernel docs also point this out.
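Using zram as a scratch RAM disk rather than swap follows the same pattern, with mkfs and mount in place of mkswap and swapon. A minimal sketch, assuming root; the mount point /mnt/zram-scratch, the 2G size, and the helper name mount_zram_scratch are illustrative. Mounting with discard should let zram release memory when files are deleted.

```shell
# Hypothetical helper: create an ext4 scratch filesystem on a zram device (root needed).
# Contents are lost on unmount or reboot, like any RAM disk.
mount_zram_scratch() {
  local mnt="${1:-/mnt/zram-scratch}" size="${2:-2G}" dev
  modprobe zram || return 1
  dev=$(zramctl --find --size "$size" --algorithm zstd) || return 1
  mkfs.ext4 -q "$dev" || return 1
  mkdir -p "$mnt" || return 1
  # discard passes deletes down to zram so it can free the compressed pages.
  mount -o discard "$dev" "$mnt" || return 1
  echo "$dev"
}
```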

Lessons learned and final setup

So what’s my current configuration? I’ve disabled zram entirely on my desktop. My /etc/default/grub includes zswap.enabled=1 zswap.compressor=lzo zswap.max_pool_percent=25. I reduced my 32 GB swap file on my NVMe drive to 16 GB. The system remains responsive even under heavy loads and hasn’t frozen during suspend/resume since making the change.
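Here is a hedged sketch of reproducing that setup. It assumes a GRUB-based distro, a non-btrfs filesystem for the swap file, and root privileges; /swapfile and the helper names make_swapfile and show_zswap are illustrative, and "quiet splash" stands in for whatever options your GRUB line already has.

```shell
# 1) Swap file on the NVMe drive (hypothetical path /swapfile, 16G as in this setup).
#    On btrfs, fallocate-based swap files need extra care; recent btrfs-progs
#    offer 'btrfs filesystem mkswapfile' instead.
make_swapfile() {
  local file="${1:-/swapfile}" size="${2:-16G}"
  fallocate -l "$size" "$file" || return 1
  chmod 600 "$file" || return 1   # swap contents should be readable by root only
  mkswap "$file" >/dev/null || return 1
  swapon "$file" || return 1
  # To make it permanent, add to /etc/fstab:  /swapfile none swap defaults 0 0
}

# 2) Kernel parameters in /etc/default/grub (append to your existing options):
#    GRUB_CMDLINE_LINUX_DEFAULT="quiet splash zswap.enabled=1 zswap.compressor=lzo zswap.max_pool_percent=25"
#    Then regenerate the config: update-grub (Debian/Ubuntu) or
#    grub2-mkconfig -o /boot/grub2/grub.cfg (Fedora and similar), and reboot.

# 3) After rebooting, confirm the values zswap is actually using.
show_zswap() {
  local dir="${1:-/sys/module/zswap/parameters}" p
  for p in enabled compressor max_pool_percent; do
    printf '%s=%s\n' "$p" "$(cat "$dir/$p")"
  done
}
```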

For servers I continue to deploy zram, but only at 20% of RAM. If a server’s workload pushes beyond that, it is usually a sign that I need to add more physical memory or tune applications. It should go without saying, but under no circumstances should we allocate 50% of RAM to zram.
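For the 20% rule, the device size can be derived from MemTotal in /proc/meminfo (reported in kB) and passed to zramctl. zram_size_mib is a hypothetical helper; if you use Debian's zram-tools instead, the equivalent knob is likely PERCENT in /etc/default/zramswap (check your version's file).

```shell
# Hypothetical helper: zram disksize as a percentage of MemTotal, in MiB.
# Args: percent (default 20), meminfo file (default /proc/meminfo).
zram_size_mib() {
  local pct="${1:-20}" meminfo="${2:-/proc/meminfo}"
  # MemTotal is in kB; whole MiB keeps zramctl's --size argument simple.
  awk -v pct="$pct" '/^MemTotal:/ { printf "%dM\n", $2 * pct / 100 / 1024 }' "$meminfo"
}
```

For example, as root: zramctl --find --size "$(zram_size_mib 20)" --algorithm zstd, followed by mkswap and swapon as usual. On a machine reporting 16777216 kB of MemTotal, zram_size_mib 20 returns 3276M.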

Conclusion

Almost 3 years ago, when I built my workstation/gaming rig, I started with the default 1 GB of disk swap that came with the system, then moved to ZRAM. At first, it gave me noticeable performance improvements, but as my memory usage grew and I kept increasing the percentage of RAM allocated to ZRAM, performance started to suffer, especially with the bug that prevented resuming from sleep. My original NVMe was also a budget purchase with about one third the speed of my current one.

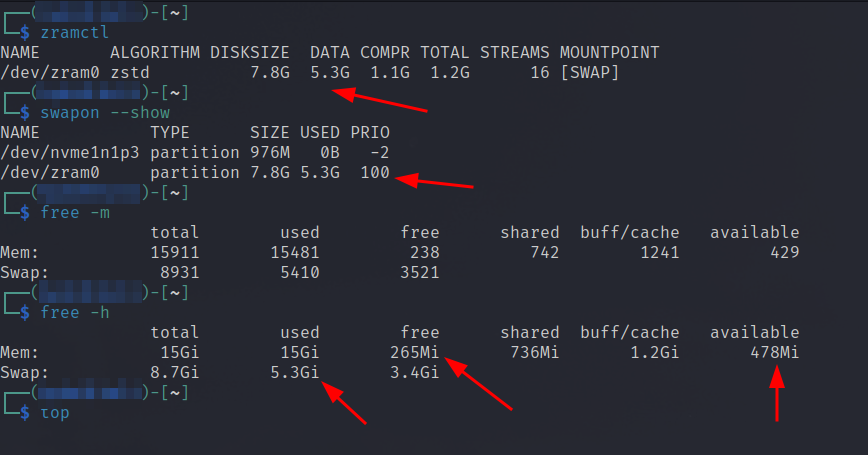

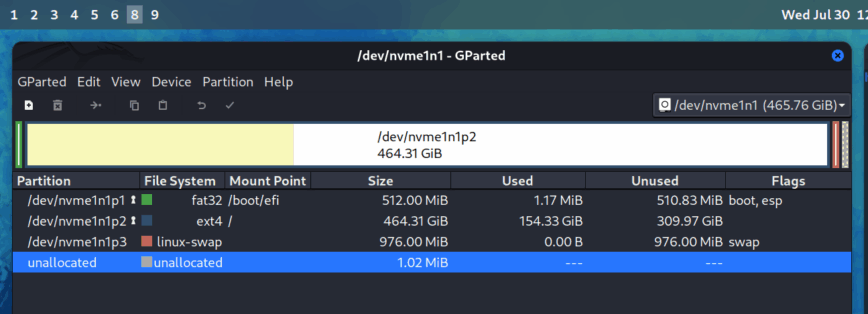

My next step was to disable ZRAM entirely and create a large 32 GB swap file. With plenty of free disk space and a fast NVMe, performance stayed smooth even under heavy memory pressure. I ran like this for a few months, no issues.

The only reason I’ve now enabled Zswap is to reduce unnecessary NVMe writes and wear from sustained heavy swap use. On a fast NVMe drive, the benefit is more about longevity and best practice than responsiveness. Overall, it was a lesson learned: ZRAM wasn’t as good a fit for this workload, or for many others, as I once believed. Today, slow storage is mostly found on Raspberry Pi boards and other budget devices; it is much less common on everyday desktops and servers.

Changing one’s mind can be humbling, especially after writing a string of blog posts proclaiming something as the “best.” However, those articles are almost a decade old, and a lot has changed since then; the distros I use now include Zram and Zswap by default. The Linux kernel now offers multiple memory compression tools, and the right one depends on your hardware and workload. That wasn’t the case a decade ago. Zstd, in particular, has become a sensible staple.

My early enthusiasm for zram came from running resource‑constrained systems and hardware with slow storage. It remains a fantastic solution for that scenario. But on a modern system, zswap’s dynamic compressed cache provides smoother performance, better suspend/resume behavior, and less tinkering.

Don’t be afraid to experiment with both; monitor your swap usage (swapon --show, free -h), try different pool sizes and compressors, and, most importantly, don’t forget the easiest fix of all: adding more RAM.
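Beyond swapon --show and free -h, /proc/swaps gives the raw numbers (sizes in KiB). The sketch below splits them into zram and disk-backed swap; swap_summary is a hypothetical helper, and it assumes zram devices appear as /dev/zramN. Pages held in the zswap pool still occupy slots on the backing swap device, so, as far as I know, they show up in the disk line rather than separately.

```shell
# Hypothetical helper: summarize /proc/swaps into zram vs. disk-backed swap.
swap_summary() {
  local swaps="${1:-/proc/swaps}"
  awk 'NR > 1 {
    kind = ($1 ~ /^\/dev\/zram/) ? "zram" : "disk"
    size[kind] += $3   # Size column, KiB
    used[kind] += $4   # Used column, KiB
  }
  END {
    # Fixed output order: zram first, then disk, skipping absent kinds.
    n = split("zram disk", kinds, " ")
    for (i = 1; i <= n; i++) {
      k = kinds[i]
      if (k in size)
        printf "%s used=%dMiB size=%dMiB\n", k, used[k] / 1024, size[k] / 1024
    }
  }' "$swaps"
}
```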

EDIT: If you’re curious about how my thinking evolved, refer to the original series starting in 2017 with Linux Performance: Almost Always Add Swap Space, then follow up with Part 2: ZRAM and Part 3: No Swap Space. Those posts, along with the discussions in our Linux forums, show how zram, zswap, and even swap‑free setups each have their place depending on workloads and hardware. Also read Increase the Performance and Lifespan of SSDs & SD Cards. These are older articles that I try to update yearly to keep the recommendations accurate.

This article will hopefully balance my recommendations between ZRAM and Zswap, or at the very least remove some of my previous bias.

The community discussion this was based on can be followed here:

As well as the related forum topics below the comments.

Quick reference for the settings and outcomes when using Zram and Zswap:

Notes

• ZRAM has a fixed uncompressed cap equal to its disksize. Actual RAM used by zram is allocated dynamically as pages are compressed and swapped out, not all at once when the device is initialized.

• ZSWAP also grows on demand up to its pool cap. When full, it starts evicting to the backing swap device.

Nice to see this empirical comparison. I haven’t dug into swapping alternatives much because swapping has been an extremely rare event on any of my systems, especially the most current ones. It may be because I seldom run enough software concurrently to exceed the amount of physical memory present, so the most that happens is that context switching changes which processes are active, all still in memory. On my favorite system I do allocate an ordinary swap file, but I’ve never seen it active. Still, these statistics are useful and undoubtedly valid for any system that’s running a lot of software, whether a laptop, desktop, or server.

Thanks for the details and for your honest assessment in the comparison of swapping techniques and technologies. I’ll definitely keep this in mind, especially if any inefficient use of resources is detected.

speaking of bursty workloads, on an older minipc set up as a proxmox server, so also using zfs (also this was right after debian adopted tmpfs for /tmp), running a handful of vm’s, docker, and lxc’s, ram was often showing 75% usage so i decided to tune it. pretty sure i was using gary explain’s config:

and afterwards i tweaked it to

this was before i upped percent to 200%, swappiness to 200, and priority to 32000

and with

stress-ng --vm 4 --vm-bytes 12G -t 60s and i hovered around 6g in zram

and my arc stayed pristine

this proxmox node (a 6700 w/ 16g ddr4) was running opnsense, my router at the time, with 0 noticeable impact DURING the stress test. honestly i was pretty afraid to even throw 12g extra at it bc it was already reporting using 12g during normal operation. still torn on lz4 vs zstd

i dunno im not trying to claim zram is better - i came across this blogpost in the context that im demoing a new cheap vps that wont allow any kernel configs so im looking into a swapfile. thanks for the writeup

Welcome to the forums @wommy

What you added is a genuinely useful reference for anyone tuning zram more aggressively, especially in mixed workloads with ZFS, VMs, or bursty memory pressure.

To answer your questions directly:

At the time, zswap ended up being the more straightforward option for my needs.

That said, the configuration you shared here is precisely the kind of practical, real-world tuning that readers benefit from.

The ARC stats holding steady under stress are particularly interesting, and your notes around swappiness, page-cluster, and watermark tuning add important context that goes beyond most zram discussions.

Thanks again for contributing this.