How To Create Optimized Docker Images For Production

Optimizing Docker images is a tricky feat. You have a lot to learn, with numerous things that can go wrong. Ask Matthew McConaughey from Interstellar.

“Anything that can go wrong, will go wrong.”

– Murphy, a smart dude

You want to have a quick feedback loop between pushing code to your GitHub repository and deploying it to a production environment. You don’t want to send gigabyte-sized Docker images across the network. Sending huge images will cost you money and time.

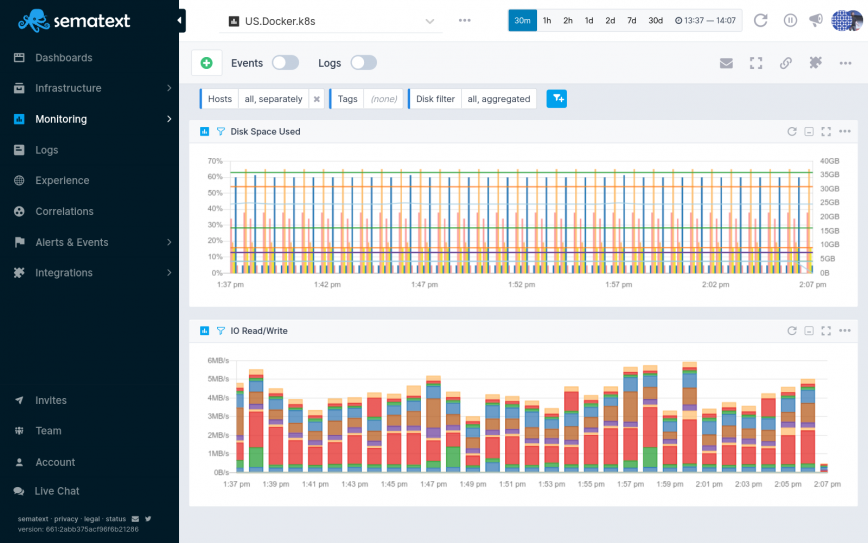

They can also cause stress if they’re poorly monitored. What if a big package sneaks in or old images start accumulating?

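Make sure to monitor your containers to watch for spikes in disk usage, and don’t be shy with the docker system prune command. By default it cleans up stopped containers, unused networks, and dangling images. Add the -a flag to also remove every image that no container is using.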
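As a rough sketch of that cleanup routine, the shell function below prints disk usage, prunes, and prints usage again so you can see what was reclaimed. The function name docker_disk_cleanup is made up for this example. It only uses standard Docker CLI subcommands, but what you choose to prune is an assumption you should adjust to your own setup.

```shell
#!/bin/sh
# Hypothetical helper: report Docker disk usage, prune, then report again.
docker_disk_cleanup() {
  # Show space used by images, containers, local volumes, and build cache.
  docker system df

  # Remove stopped containers, unused networks, dangling images,
  # and dangling build cache. --force skips the confirmation prompt.
  docker system prune --force

  # Show the usage again to see how much space was reclaimed.
  docker system df
}

# Usage: docker_disk_cleanup
# To also delete every image that no container uses, run:
#   docker system prune --all --force
```

Running something like this on a schedule on build machines is one way to keep old images from piling up unnoticed.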

In this article I want to explain how to make your Docker images smaller and drastically cut down build time.

By the end, you’ll know how to use tiny base images, like the Alpine distro, to reduce the initial size of your images, and how to use the multi-stage build process to exclude unnecessary files.

Here’s a quick agenda of what you’ll learn:

- Why create optimized Docker images?

- How to create optimized Docker images?

- Using base Ubuntu images

- Using base Alpine images

- Excluding build tools by using multi-stage builds

- CI/CD pipeline optimization

Why create optimized Docker images?

Build times are longer if you have large Docker images. It also affects the time it takes to send images between CI/CD pipelines, container repositories, and cloud providers.

Let’s say you have a huge, 1GB image. Every time you push a change and trigger a build, it will put huge stress on your network and make your CI/CD pipeline sluggish. All of this costs you time, resources, and money. Sending data over the network is not cheap in the cloud. Cloud providers like AWS bill you for the data you send.
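To get a feel for the numbers, here is a back-of-the-envelope sketch. The figures (20 pushes a day, a 30-day month) are made-up assumptions. It models the worst case, where every push sends the full image because no layers are cached. The function name is hypothetical too.

```shell
#!/bin/sh
# monthly_transfer_mb IMAGE_MB PUSHES_PER_DAY [DAYS]
# Worst-case estimate: every push transfers the whole image.
monthly_transfer_mb() {
  image_mb=$1
  pushes_per_day=$2
  days=${3:-30}   # assumed month length
  echo $(( image_mb * pushes_per_day * days ))
}

monthly_transfer_mb 1000 20   # 1GB image  -> 600000 (about 600GB a month)
monthly_transfer_mb 11 20     # 11MB image -> 6600 (about 6.6GB a month)
```

Multiply the result by whatever your provider charges per gigabyte to turn it into a rough monthly bill.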

Another important point to consider is security. Keeping images tiny reduces the attack surface. Smaller images have fewer installed packages, which means fewer places for vulnerabilities to hide.

These are all reasons why Docker images you want in production should only have the bare necessities installed.

How to create optimized Docker images?

You have different approaches to decrease the size of Docker images. When thinking about production environments you usually don’t need any build tools. You can run apps without them, so there’s no need to add them at all.

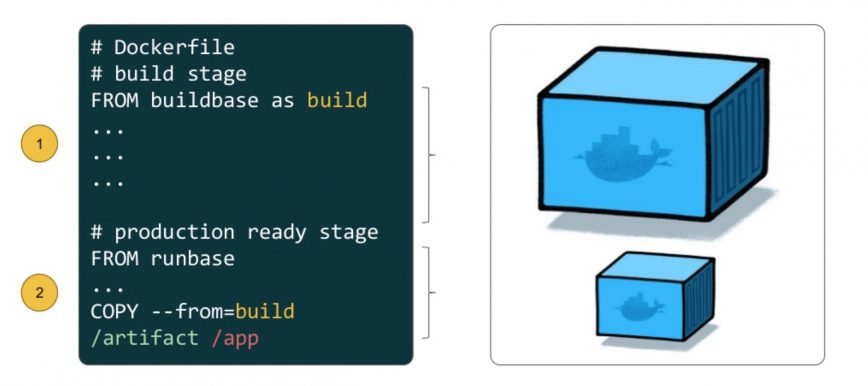

The multi-stage build process lets you use intermediate images while building your app. You can build the code and install dependencies in one image, then copy over the final version of your app to an empty image without any build tools.

Using tiny base images is another key factor. Alpine Linux is a tiny distribution that only has the bare necessities for your app to run.

Using base Ubuntu images

Using the base Ubuntu image is natural when you start out with Docker. You install packages and modules you need to run your app.

Here’s a sample Dockerfile for Ubuntu. Let’s see how this affects build time.

# Take a useful base image

FROM ubuntu:18.04

# Install build tools

RUN install build-tools

# Set a work directory

WORKDIR /app

# Copy the app files to the work directory

COPY . .

# Build the app

RUN build app-executable

# Run the app

CMD ["./app"]

You run the build command to create a Docker image.

docker build -f Dockerfile -t ubuntu .

No matter how fast your connection is, it usually takes a while. The final size of the image is rather large. After running docker images you’ll see:

[output] ubuntu latest fce3547cf981 X seconds ago 1GB

I don’t know about you, but anything close to 1GB for a basic Docker image is a bit too much for my taste. This may vary based on the build tools and runtime you’re using.

Using base Alpine images

Using smaller base images is the easiest way to optimize your Docker images. The Alpine distro is an awesome lightweight image you can use to cut down the size and build time of your images. Let me show you.

If you edit the Dockerfile a bit to use Alpine, you can see a huge improvement right away.

# Take a tiny Alpine image

FROM alpine:3.8

# Install build tools

RUN install build-tools

# Set a work directory

WORKDIR /app

# Copy the app files to the work directory

COPY . .

# Build the app

RUN build app-executable

# Run the app

CMD ["./app"]

Just by changing the FROM line, you switch to a tiny base image. Run the build once again.

docker build -f Dockerfile -t alpine .

Care to guess the size?

docker images

It varies based on the runtime, but only reaches approximately 250MB.

[output]

nodejs 10-alpine d7c77d094be1 X seconds ago 71MB

golang 1.10-alpine3.8 ba0866875114 X seconds ago 258MB

This is a huge improvement. Just by changing one line you can cut down the size by roughly 740MB or more, depending on the runtime. Let’s take it one step further and use multi-stage builds.

Excluding build tools by using multi-stage builds

Images you want to run in production shouldn’t have any build tools. The same logic applies to redundant dependencies. Exclude everything you don’t need from the image. Only add the bare necessities for it to run.

Doing this with multi-stage builds is simple. The logic behind it is to build the executable in one image that contains build tools. Then move it to a blank image that does not have any unnecessary dependencies.

Edit the Dockerfile again, but this time add a second FROM statement. You can build two images in one Dockerfile. The first one will act as the build, while the second will be used to run the executable.

# Take a useful base image

FROM ubuntu:18.04 AS build

# Install build tools

RUN install build-tools

# Set a build directory

WORKDIR /build

# Copy the app files to the work directory

COPY . .

# Build the app

RUN build app-executable

####################

# Take a tiny Alpine image

FROM alpine:3.8

# Copy the executable from the first image to the second image

COPY --from=build /build .

# Run the app

CMD ["./app"]

Build the image once again.

docker build -f Dockerfile -t multistage .

Care to guess the size now?

docker images

By using multi-stage builds you will see two images.

[output]

multistage golang 82fc005abc40 X seconds ago 11.3MB

multistage nodejs 4aadd10d2711 X seconds ago 61.9MB

<none> <none> d7855c8f8280 X seconds ago 1GB

The <none> image is built with the FROM ubuntu:18.04 command. It acts as an intermediary to build and compile the application, while the multistage image in this context is the final image which only contains the executable.

From an initial 1GB, down to an image size of 11MB, multi-stage builds are awesome! Cutting down the size by over 900MB is something to be proud of. This size difference will definitely improve your production environment in the long run.
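The Dockerfile above uses placeholder commands, so here is a more concrete sketch for a Go app. It assumes a single main.go that only uses the standard library. The function name, the Dockerfile.multistage file name, and the golang:1.10-alpine3.8 build image are choices made for this example, not a description of any particular setup. Two details are worth noting:

- The build stage is Alpine-based and sets CGO_ENABLED=0 to request a statically linked binary. A binary linked against Ubuntu's glibc will generally not start on Alpine, which ships musl instead.
- Only the executable is copied into the final stage, not the whole build directory.

```shell
#!/bin/sh
# Hypothetical helper: write a two-stage Dockerfile for a small Go app.
write_go_multistage_dockerfile() {
  target=${1:-Dockerfile.multistage}
  cat > "$target" <<'EOF'
# Stage 1: build image that contains the Go toolchain
FROM golang:1.10-alpine3.8 AS build
WORKDIR /build
COPY . .
# CGO_ENABLED=0 asks Go for a statically linked binary
RUN CGO_ENABLED=0 go build -o app main.go

# Stage 2: tiny runtime image without any build tools
FROM alpine:3.8
WORKDIR /app
# Copy only the executable from the build stage
COPY --from=build /build/app .
CMD ["./app"]
EOF
}

# Usage:
#   write_go_multistage_dockerfile Dockerfile.multistage
#   docker build -f Dockerfile.multistage -t multistage .
```

The exact size you end up with depends on your app, but the final image should contain little more than the Alpine base and one binary.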

CI/CD pipeline optimization

Why is all this important? You don’t want to push billions of bytes around the network. Docker images should be as tiny as possible because you want deployments to be lightning fast. By following these simple steps, you’ll have an optimized CI/CD pipeline in no time.

At Sematext we follow these guidelines and use multi-stage builds in our build pipeline. When you cut down the time between builds you improve developer productivity. This is the end goal. Keeping everybody happy and enjoying what they do. We even use our own product to monitor our Kubernetes cluster and CI/CD pipeline. Having insight into every container in the cluster and how they behave while the CI/CD pipeline is updating them is pretty sweet.

In the end it’s all about time and money. You don’t want to waste time on building and sending images between clusters and cloud providers. This costs money. Yeah, transfer fees are a thing. You have to pay for the throughput you create in the network.

If you have an almost gigabyte-sized image to push every time you trigger a build, that’ll add up. It won’t be pretty, that’s a fact. Sematext has clusters across regions. Hear it from someone who’s had that headache before. Data transfer fees come around to bite you!

Why should you start using multi-stage builds?

In the end, keep it simple. Only install bare necessities in your Docker images. Images suited for production tend not to need any build tools to run the application. Hence, there’s no need to add them at all. Use multi-stage builds and exclude them whenever you can.

You can apply this to any language, even interpreted ones like JavaScript on Node.js or Python. You use intermediate images to build the code and install dependencies. Only then do you copy over the final version of your application to an empty image with a tiny base, like Alpine. Once you start using multi-stage builds, you’ll have a hard time stopping. It’s that awesome!
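For an interpreted runtime the same idea might look like the sketch below. It assumes a Node.js app with a package.json, an entry point called server.js, and node_modules listed in .dockerignore. Those names, the function name, and the Dockerfile.node file name are all hypothetical. Both stages use the same Alpine-based image, so anything installed in the first stage also runs in the second.

```shell
#!/bin/sh
# Hypothetical helper: write a two-stage Dockerfile for a Node.js app.
write_node_multistage_dockerfile() {
  target=${1:-Dockerfile.node}
  cat > "$target" <<'EOF'
# Stage 1: install production dependencies
FROM node:10-alpine AS build
WORKDIR /build
COPY package*.json ./
RUN npm install --production
COPY . .

# Stage 2: runtime image that receives the finished app
FROM node:10-alpine
WORKDIR /app
COPY --from=build /build .
CMD ["node", "server.js"]
EOF
}

# Usage:
#   write_node_multistage_dockerfile Dockerfile.node
#   docker build -f Dockerfile.node -t multistage .
```

The npm cache and anything else the install step leaves outside /build stay behind in the first stage. If a dependency needs compilers for native addons, you would install them in the first stage only.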

I had a blast writing this article, hope you peeps enjoyed it! If you have any questions or just want to have a chat about DevOps, get in touch with me on Twitter at @adnanrahic or head over to our website and use the live chat.


About Sematext

Sematext, a global products and services company, runs Sematext Cloud, an infrastructure and application performance monitoring and log management solution that gives businesses full-stack visibility by exposing logs, metrics, and events through a single Cloud or On Premise solution. Sematext also provides Consulting, Training, and Production Support for Elasticsearch, the ELK/Elastic Stack, and Apache Solr. For more information, visit sematext.com.

About the author

Adnan Rahić is a JavaScript developer at heart. Startup founder, author and teacher with a passion for DevOps and Serverless. As of late focusing on product growth and developer advocacy.